import pandas as pd

import geopandas as gp

import mapclassify

import foliumOpen Ottawa

Data Provider

Open Ottawa is an open-access data portal designed to provide accessible datasets from municipal data sources. Users can access datasets from a variety of municipal departments including Parks, Public Health, Water Treatment, and many others. All data from this portal is subject to Open Data Licence Version 2.0. Information released under this licence may be copied, modified, published, adapted, or otherwise used for any lawful purpose, as long as a data attibution from the information provider is included. When using information from multiple providers, the following attribution must be used: Contains information licensed under the Open Government Licence – City of Ottawa.

Plotting Geographical Data Points on Open Street Maps

library(leaflet)

library(sf)

library(tidyverse)

library(OpenStreetMap)A word about retrieving Open Ottawa Data.

Open Ottawa datasets are available to download as CSVs, however the download URL is generated on demand, and expires shortly, within a few days, or sometimes even hours. For this reason, when revisiting a dataset, you may have to navigate to the dataset’s “About” page and regenerate a download link.

Service Requests

#https://open.ottawa.ca/datasets/8a5030af268a4a3485b72356dd7dfa85/about

service = pd.read_csv("311opendata_currentyear")<string>:4: DtypeWarning: Columns (0,9) have mixed types. Specify dtype option on import or set low_memory=False.service.head() Service Request ID | Numéro de demande ... Channel | Voie de service

0 202457000007 ... Phone

1 202457000012 ... Walk-In

2 202457000119 ... Voice In

3 202457000145 ... Walk-In

4 202457000177 ... Voice In

[5 rows x 11 columns]

service.columns = service.columns.str.split("|").str[0].str.rstrip()#https://open.ottawa.ca/datasets/8a5030af268a4a3485b72356dd7dfa85/about

csv_path <- ("311opendata_currentyear")

service <- read_csv(csv_path)Rows: 72419 Columns: 11

── Column specification ────────────────────────────────────────────────────────

Delimiter: ","

chr (10): Service Request ID | Numéro de demande, Status | État, Type | Typ...

date (1): Opened Date | Date d'ouverture

ℹ Use `spec()` to retrieve the full column specification for this data.

ℹ Specify the column types or set `show_col_types = FALSE` to quiet this message.head(service)# A tibble: 6 × 11

Service Request ID | Nu…¹ `Status | État` `Type | Type` Description | Descri…²

<chr> <chr> <chr> <chr>

1 202457000007 Resolved Water and th… Water Hydrants - Hydr…

2 202457000012 Resolved Parking Cont… No Parking | Stationn…

3 202457000119 Resolved Parking Cont… Overtime Parking | Co…

4 202457000145 Resolved Parking Cont… Overtime Parking | Co…

5 202457000177 Active Roads and Tr… Infrastructure - Asse…

6 202457000224 Active Roads and Tr… Road Maintenance - Ca…

# ℹ abbreviated names: ¹`Service Request ID | Numéro de demande`,

# ²`Description | Description`

# ℹ 7 more variables: `Opened Date | Date d'ouverture` <date>,

# `Closed Date | Date de fermeture` <chr>, `Address | Adresse` <chr>,

# `Latitude | Latitude` <chr>, `Longitude | Longitude` <chr>,

# `Ward | Quartier` <chr>, `Channel | Voie de service` <chr>colnames(service) <- sub(" \\|.*", "", colnames(service))

service <- service |> rename_all(make.names)Some service requests do not include location data. Let’s filter those out.

service_locations = service[~service["Latitude"].str.contains("N")]

service_locations.tail() Service Request ID Status ... Ward Channel

68707 202400455741 Open ... 23 WEB

68708 202400455956 Open ... 14 WEB

68709 202400456910 Closed ... 17 Voice In

68710 202400457011 Closed ... 2 WEB

68711 202400457015 Open ... 19 WEB

[5 rows x 11 columns]parkservice <- service |> filter(!str_detect(Latitude, 'N'))|>

filter(str_detect(Description, "Park")) |>

st_as_sf(coords = c("Longitude", "Latitude")) |>

st_coordinates()

service_locations <- service |> filter(!str_detect(Latitude, 'N'))After initially plotting all points, I noticed that there was a service request apparently placed from the Pacific Ocean near Africa. Clearly there was a data input error. It’s worth filtering for latitudes containing 45 (which reflect Ottawa).

service_geo = gp.GeoDataFrame(

service_locations, geometry=gp.points_from_xy(service_locations["Longitude"],

service_locations["Latitude"]), crs="epsg:4386")

service_geo[service_geo["Latitude"].str.contains("45")].explore()First make static plots, then make plots that are zoom-able. In both cases data must be converted into numeric latitude and longitude.

With static plots, we need to draw a background for a pre-defined region and then add the points.

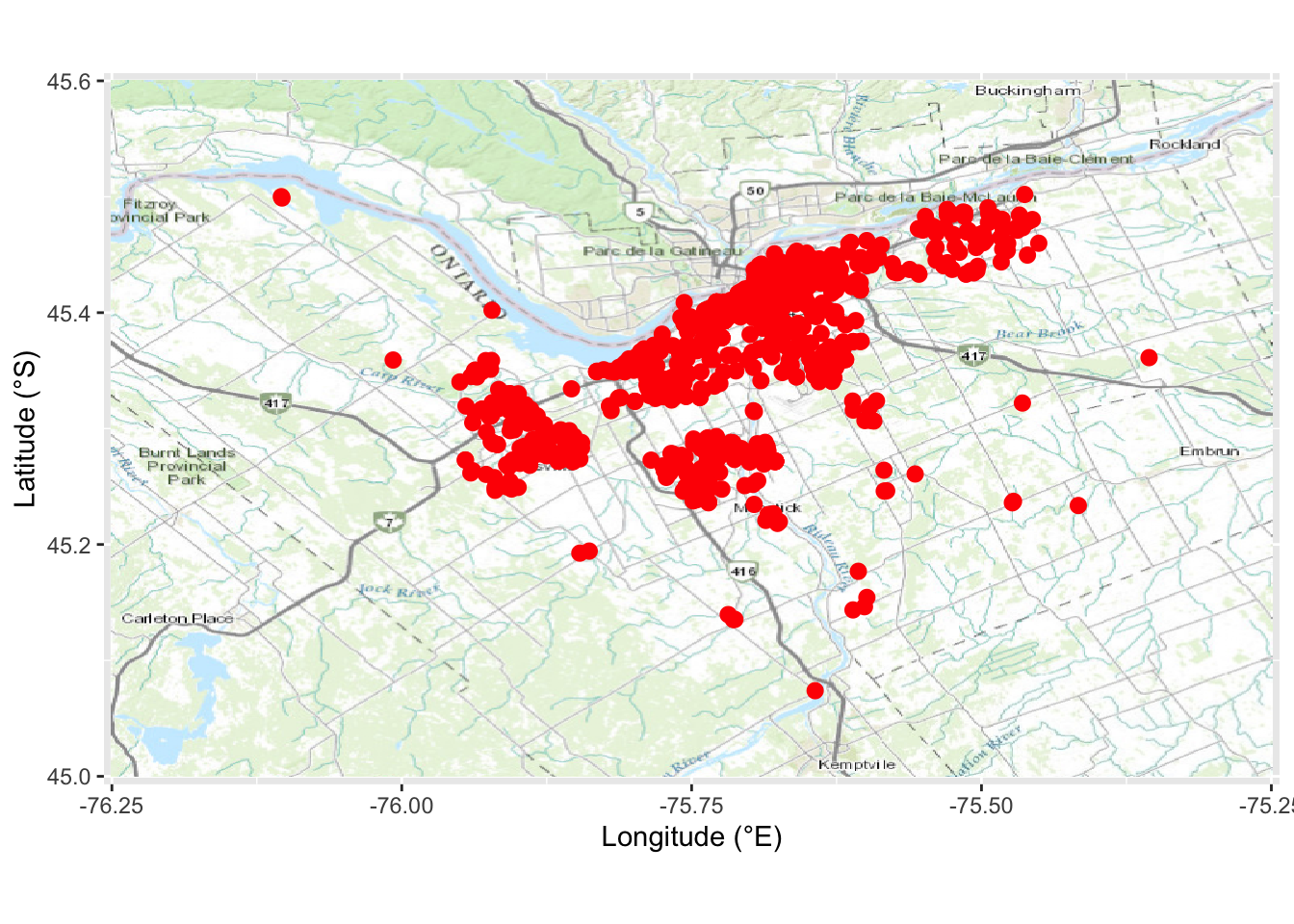

# filter data:

parkservice <- service |> filter(!str_detect(Latitude, 'N'))|>

filter(str_detect(Description, "Park")) |>

st_as_sf(coords = c("Longitude", "Latitude")) |>

st_coordinates()

# get the map based on the region and zoom level

sa_map <- openmap(c(45.6,-75.25), c(45,-76.25),

zoom = 10,

type = "esri-topo", mergeTiles = TRUE)

# project the map into the region of interest

sa_map2 <- openproj(sa_map)

# Pass the open street map object into the plotting function

autoplot.OpenStreetMap(sa_map2) +

geom_point(data = parkservice,

aes(x = X, y = Y), # overlay the points

colour = "red", size = 2.5) +

xlab("Longitude (°E)") + ylab("Latitude (°S)")

Use leaflet for zoom-able maps.

parkservice <- service_locations |> filter(str_detect(Latitude, "45")) |>

filter(str_detect(Description, "Park")) |>

st_as_sf(coords = c("Longitude", "Latitude")) |>

st_coordinates()

leaflet() |>

addTiles() |>

addMarkers(data = parkservice)Traffic Collisions

#https://open.ottawa.ca/datasets/ottawa::2020-tabular-transportation-collision-data/about

traffic = pd.read_csv("2020_Tabular_Transportation_Collision_Data.csv")

traffic.head() Anom_ID Accident_Date Accident_Time ... Latitude Longitude ObjectId

0 20--1127 2020/01/22 9:10 AM ... 45.322184 -75.777007 1

1 20--1128 2020/01/22 9:10 AM ... 45.385350 -75.585620 2

2 20--1129 2020/01/22 9:16 AM ... 45.422352 -75.636291 3

3 20--113 2020/01/04 12:45 PM ... 45.266481 -75.772693 4

4 20--1130 2020/01/22 9:21 PM ... 45.282420 -75.894501 5

[5 rows x 28 columns]#https://open.ottawa.ca/datasets/ottawa::2020-tabular-transportation-collision-data/about

csv_path <- "2020_Tabular_Transportation_Collision_Data.csv"

traffic = read_csv(csv_path)Rows: 10047 Columns: 28

── Column specification ────────────────────────────────────────────────────────

Delimiter: ","

chr (13): Anom_ID, Accident_Time, Location, Geo_ID, Accident_Location, Clas...

dbl (14): No__of_Vehicles, No__of_Bicycles, No__of_Motorcycles, No__of_Pede...

date (1): Accident_Date

ℹ Use `spec()` to retrieve the full column specification for this data.

ℹ Specify the column types or set `show_col_types = FALSE` to quiet this message.head(traffic)# A tibble: 6 × 28

Anom_ID Accident_Date Accident_Time Location Geo_ID Accident_Location

<chr> <date> <chr> <chr> <chr> <chr>

1 20--1127 2020-01-22 9:10 AM GREENBANK RD @ … 5167 02 - Intersectio…

2 20--1128 2020-01-22 9:10 AM HIGHWAY 417 btw… __3ZA… 01 - Non interse…

3 20--1129 2020-01-22 9:16 AM LEMIEUX ST @ ST… 2021 03 - At intersec…

4 20--113 2020-01-04 12:45 PM CEDARVIEW RD @ … 10465 03 - At intersec…

5 20--1130 2020-01-22 9:21 PM TWIST WAY btwn … e___2… 04 - At/near pri…

6 20--1131 2020-01-23 1:09 PM MEADOWLANDS DR … 625 02 - Intersectio…

# ℹ 22 more variables: Classification_of_Accident <chr>,

# Initial_Impact_Type <chr>, Environment_Condition <chr>, Light <chr>,

# Road_Surface_Condition <chr>, Traffic_Control <chr>,

# Traffic_Control_Condition <chr>, No__of_Vehicles <dbl>,

# No__of_Bicycles <dbl>, No__of_Motorcycles <dbl>, No__of_Pedestrians <dbl>,

# Max_Injury <chr>, No__of_Injuries <dbl>, No__of_Minimal <dbl>,

# No__of_Minor <dbl>, No__of_Major <dbl>, No__of_Fatal <dbl>, X <dbl>, …traffic_geo = gp.GeoDataFrame(

traffic, geometry=gp.points_from_xy(traffic["Longitude"],

traffic["Latitude"]), crs="epsg:4386")

traffic_geo.explore()sideswipe_latlong <- traffic |> filter(str_detect(Initial_Impact_Type, "Sideswipe")) |>

st_as_sf(coords = c("Longitude", "Latitude")) |>

st_coordinates()

leaflet() |>

addTiles() |>

addMarkers(data = sideswipe_latlong)